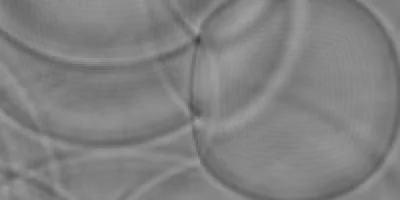


Automated web page generation with OpenAI's GPT-3

Image generated by DALL-E. The prompt was suggested by ChatGPT as a cover image for this article: "Draw an image of a robot working at a lab bench, surrounded by books and papers. The robot is holding a tablet or laptop computer. In the background, there is a whiteboard with scientific equations and diagrams."


In this article, I explain how to use artificial intelligence to automatically generate web pages on any topic, including images. To do this, we will make use of the GPT-3 language model. GPT-3 stands for "Generative Pre-trained Transformer 3". It is a neural network language model developed by OpenAI. As a generative model, it creates new content rather than just categorizing or classifying existing content. GPT-3 is particularly good at creating text that is human-like in style and content. It can also be used for tasks such as translation, question answering, or summarization. GPT-3 is currently one of the most powerful and versatile neural network models.

Unlike the newer ChatGPT, GPT-3 offers a programming interface. The Ghostwriter script is written in Python and generates both text and images. It requires no user input except for the topic. The topic can be formulated in any language known to GPT-3. However, it appears to work better in English, the "native language" of the language model.

Running the script requires a recent version of Python. OpenAI's GPT-3 is a paid service, so the Ghostwriter script also needs a valid API key. You can currently register with OpenAI for free and receive a one-time credit of 18 Euros. Once the credit is used up, you have to pay for the service. Creating one article costs roughly 15 to 20 cents.

This script is not aimed at pupils or students who no longer want to write their homework themselves, although they could use it for that. Instead, I would like to demonstrate how easy fully automatic text generation has become and how good the results are. The Ghostwriter script can be used to quickly check article ideas, and it provides a useful starting point for further research of your own. For me, it also establishes a lower threshold of quality for an article. If a handwritten article is no more informative than an automatically generated one, then you've done something wrong.

When it comes to topic selection, the rule "garbage in, garbage out!" applies. If the topic is nonsense, the result will also be nonsense. For example, you can have an article written that explains how aliens ended the Hundred Years' War. It will just be pretty bad, because the AI gets lost in the balancing act between actual history and the fictional premise. The quality of the results for German articles is roughly at the level of a school report. The generated English articles are better.

Examples of AI-generated articles:

If you want to use the Ghostwriter script, you need an OpenAI API key. The key must be stored in the environment variable "OPENAI_API_KEY". The use of GPT-3 is subject to a fee. Currently OpenAI offers a one-time credit of 18 Euros on registration, which can be used up. The cost of creating an article with images is in the cent range.
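Checking for the key before the first request gives a clearer error message than a failed API call. The following is a minimal sketch of such a check. The helper name `load_api_key` is hypothetical and not taken from the Ghostwriter script. Only the variable name OPENAI_API_KEY comes from the text above.

```python
import os


def load_api_key(env=os.environ):
    """Return the OpenAI API key or raise a readable error if it is missing."""
    # OPENAI_API_KEY is the variable name given in the article.
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Please set the environment variable OPENAI_API_KEY.")
    return key
```

The `env` parameter exists only so that the function can be tried out with a plain dictionary instead of the real environment.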

Download the Ghostwriter script via GitHub

For the execution you need Python 3. The script can be started with the following command line:

    python ./ghostwriter.py -t "The Rise of AI generated Content" -tmpl ./template.html -o ai_content

| Parameter | Description |
|---|---|
| -t | The subject of the web page. This text should be in quotes. |
| -tmpl TEMPLATE | A file (for example, HTML or PHP) that provides the framework for the web page. The file must contain the placeholders {TOPIC}, {CONTENT} and optionally {VERSION}. The script automatically replaces them with the headline, the HTML-formatted article and the script version number, respectively. |
| -o OUTPUT | Output directory for the article. If this directory does not exist, it is created. |
| -s WRITING-STYLE | Optional: A text indicating the writing style (e.g. "Carl Sagan", "National Geographic", "PBS" or "Drunken Pirate"). |
| -v | Optional: If this flag is set, the script prints the GPT-3 requests to the console. |
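The command line interface and the placeholder replacement described in the table can be sketched as follows. This is not the script's actual code. The function names `parse_args` and `fill_template` are hypothetical, and the sketch assumes that -t, -tmpl and -o are mandatory because the table marks only -s and -v as optional.

```python
import argparse


def parse_args(argv=None):
    """Parse the options listed in the table above."""
    parser = argparse.ArgumentParser(description="Generate a web page on a topic.")
    parser.add_argument("-t", dest="topic", required=True, help="subject of the web page")
    parser.add_argument("-tmpl", dest="template", required=True, help="template file")
    parser.add_argument("-o", dest="output", required=True, help="output directory")
    parser.add_argument("-s", dest="style", default=None, help="optional writing style")
    parser.add_argument("-v", dest="verbose", action="store_true", help="print requests")
    return parser.parse_args(argv)


def fill_template(template, topic, content, version=""):
    """Replace the {TOPIC}, {CONTENT} and {VERSION} placeholders in the template text."""
    return (
        template.replace("{TOPIC}", topic)
        .replace("{CONTENT}", content)
        .replace("{VERSION}", version)
    )


args = parse_args(["-t", "The Rise of AI generated Content",
                   "-tmpl", "./template.html", "-o", "ai_content"])
page = fill_template("<h1>{TOPIC}</h1>{CONTENT}", args.topic, "<p>Article text</p>")
```

Plain string replacement is used instead of `str.format` so that curly braces elsewhere in an HTML or PHP template (for example in inline CSS) do not cause errors.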

GPT-3 limits the size of both its requests and its responses. These limits are measured in "tokens". A token is said to be roughly equivalent to four characters. Even the most capable language model, "text-davinci-003", allows only about 4000 tokens for question and answer combined. It is therefore not possible to generate a complete article with a single request.
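The rule of thumb of four characters per token is enough for a rough budget calculation. The sketch below is an approximation under exactly that assumption and does not use a real tokenizer. The names `estimate_tokens` and `remaining_tokens` are hypothetical.

```python
import math

CONTEXT_LIMIT = 4000  # tokens shared by prompt and answer, as stated above
CHARS_PER_TOKEN = 4   # rule of thumb, not an exact tokenizer


def estimate_tokens(text):
    """Estimate the token count of a text from its length in characters."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def remaining_tokens(prompt, limit=CONTEXT_LIMIT):
    """Estimate how many tokens are left for the answer to a given prompt."""
    return max(0, limit - estimate_tokens(prompt))
```

By this estimate, 4000 tokens correspond to about 16,000 characters for prompt and answer together, which is less than a longer article with several chapters needs.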

For this reason, the problem must be broken down into subtasks that are sent to GPT one at a time. The Python code to send a single request is very simple.

    import openai  # legacy interface of the openai package (versions before 1.0)

    # Sends a single prompt to GPT-3 and returns the generated text.
    def __create(self, prompt, temp=0.3, freq_penalty=0.3, pres_penalty=0.2, max_tokens=4000):
        response = openai.Completion.create(
            model="text-davinci-003",
            prompt=prompt,
            temperature=temp,
            max_tokens=max_tokens,
            top_p=1.0,
            frequency_penalty=freq_penalty,
            presence_penalty=pres_penalty,
        )
        # The API returns a list of choices; use the text of the first one.
        result = response["choices"][0]["text"]
        return result.strip()

You send a request (prompt) and set some parameters.

The max_tokens parameter limits how many tokens the model may generate in its answer. The prompt and the answer together must fit into the model's token limit, so a long prompt leaves less room for the answer.

The frequency_penalty parameter affects the frequency of words in the text generated by GPT-3. The higher this value, the greater the penalty for a repeated word, and the penalty grows with each new occurrence of that word in the output text.

The presence_penalty parameter also controls how often word repetitions are allowed. In contrast to the frequency_penalty, the presence_penalty does not depend on how often a word has already appeared. It is always the same and takes effect as soon as the word has been used at least once.

The temperature parameter controls how much influence randomness has in creating an answer. If this value is 0, GPT will give practically the same answer to the same prompt every time.
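The effect of the three sampling parameters can be illustrated with a small toy model. The penalty formula follows the one given in OpenAI's API documentation: each token's score is reduced by its count times the frequency penalty, plus a flat presence penalty if it occurred at all. Everything else is an assumption made for illustration: the function names are hypothetical, whole words stand in for tokens, and the scores are made up.

```python
import math


def apply_penalties(logits, counts, freq_penalty=0.3, pres_penalty=0.2):
    """Lower the score of every token that already occurs in the output."""
    penalized = {}
    for token, logit in logits.items():
        seen = counts.get(token, 0)
        presence = pres_penalty if seen > 0 else 0.0  # flat, applied once
        penalized[token] = logit - seen * freq_penalty - presence
    return penalized


def softmax_with_temperature(logits, temp):
    """Turn scores into sampling probabilities; a low temp sharpens them."""
    if temp <= 0:  # treat 0 as greedy decoding: always pick the best token
        best = max(logits, key=logits.get)
        return {token: float(token == best) for token in logits}
    scaled = {token: logit / temp for token, logit in logits.items()}
    top = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {token: math.exp(value - top) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


# "fossil" was already used three times, "trilobite" not at all.
scores = apply_penalties({"fossil": 2.0, "trilobite": 2.0}, {"fossil": 3})
probabilities = softmax_with_temperature(scores, temp=0.3)
```

With the defaults of the request function above, the score of "fossil" drops by 3 × 0.3 + 0.2 = 1.1, so the unused word becomes the far more likely choice.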

The article starts with the generation of a table of contents. For this a command is sent to GPT-3, in which the AI is requested to create a table of contents for the article. It is further requested that the return should be in the form of a specially formatted XML file. This is done by specifying an XSD schema.

    Create table of contents for an article titled "{topic}" with at least {nchapter} chapters;
    Captions must be unnumbered; Output in XML with the XSD scheme listed below;
    Caption attribute must consist of at least two words
    <?xml version="1.0" encoding="utf-8"?>
    <xs:schema attributeFormDefault="unqualified" elementFormDefault="qualified" xmlns:xs="http://www.w3.org/2001/XMLSchema">
    ...

It is worth noting that the language model understands this instruction. This means that GPT-3 must be familiar with XML and XSD file formats. I created the XSD schema with an online tool from an XML file that GPT-3 had created in an earlier run. The following XML file shows the output for an English language article on the "Paleocene–Eocene Thermal Maximum":

    <?xml version="1.0" encoding="utf-8"?>
    <outline>
      <topic>Paleocene–Eocene Thermal Maximum</topic>
      <subtopic Caption="Background Information">
        <subsubtopic Caption="Geological Context" />
        <subsubtopic Caption="Climate Changes" />
      </subtopic>
      <subtopic Caption="Impacts on Ecosystems">
        <subsubtopic Caption="Marine Ecosystems" />
        <subsubtopic Caption="Terrestrial Ecosystems" />
      </subtopic>
      <subtopic Caption="Modern Implications">
        <subsubtopic Caption="Global Warming" />
        <subsubtopic Caption="Carbon Cycle" />
      </subtopic>
    </outline>

Using the machine-readable table of contents, it is now possible to create the article chapter by chapter, including the images. This is done by simply iterating through the nodes of the XML file and requesting each chapter or subsection individually. There is no fixed format for requests to GPT-3. The following example creates a chapter introduction for an article about the Cambrian Explosion in HTML format.

Topic: Introduction / The Cambrian Explosion; Introductory; 100 Words; [Format:HTML;No heading;use <p> tags]

I had to explicitly tell the artificial intelligence here to use <p> tags in the output, as this was occasionally omitted. The prompts for subchapter creation look like this:

[Caption: The Cambrian Explosion][Topic: "Evolutionary Causes:Genetic Changes"][Format:HTML;No heading;use <p> tags][Write: min. 600 words]

I changed the format a little bit to make it shorter. The shorter the better, because you want to save tokens. However, you can't go completely without postprocessing, because in the answer GPT-3 will always explain in the first paragraph what the selected topic is all about. So in this example it would describe time and time again what the Cambrian Explosion actually was. It does not know that it has already done that in the previous subchapters. For this reason the first paragraph in the answer of GPT-3 is always ignored by the Ghostwriter script.
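The iteration over the outline and the removal of the first paragraph can be sketched as follows. This is an illustration, not the code of the Ghostwriter script: the function names are hypothetical, the outline is a shortened version of the XML shown earlier, and cutting at the first closing </p> tag is only one possible way to drop the introductory paragraph.

```python
import xml.etree.ElementTree as ET

OUTLINE = """<?xml version="1.0" encoding="utf-8"?>
<outline>
  <topic>Paleocene–Eocene Thermal Maximum</topic>
  <subtopic Caption="Background Information">
    <subsubtopic Caption="Geological Context" />
    <subsubtopic Caption="Climate Changes" />
  </subtopic>
</outline>"""


def subchapter_prompts(outline_xml, min_words=600):
    """Build one subchapter prompt per <subsubtopic> node of the outline."""
    root = ET.fromstring(outline_xml.encode("utf-8"))
    title = root.findtext("topic")
    prompts = []
    for chapter in root.iter("subtopic"):
        for section in chapter.iter("subsubtopic"):
            topic = f'{chapter.get("Caption")}:{section.get("Caption")}'
            prompts.append(
                f'[Caption: {title}][Topic: "{topic}"]'
                f"[Format:HTML;No heading;use <p> tags][Write: min. {min_words} words]"
            )
    return prompts


def drop_first_paragraph(html):
    """Remove the first <p>...</p> block, which tends to re-introduce the topic."""
    end = html.find("</p>")
    if end == -1:
        return html  # nothing that looks like a paragraph, leave the text alone
    return html[end + len("</p>"):].lstrip()


for prompt in subchapter_prompts(OUTLINE):
    print(prompt)
```

Each prompt would then be sent as its own request, and the answer would be passed through `drop_first_paragraph` before being appended to the article.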

GPT-3 also helps with generating suitable images. For this we ask the AI to write a prompt describing an image that would illustrate the chapter well:

Create an DALL-E prompt in english for creating a photorealistic illustration for an article with this title:The Cambrian Explosion

DALL-E is an artificial intelligence from OpenAI for creating images from text input. We send GPT-3's answer straight to OpenAI's image endpoint so that it creates this image for us:

Create a photorealistic illustration of a diverse range of animals from the Cambrian period, representing the sudden emergence of life during the Cambrian Explosion.

The image request goes through openai.Image.create, OpenAI's image generation endpoint, which is backed by DALL-E rather than GPT-3. The generated image can be downloaded from a URL using Python:

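    from io import BytesIO

    import openai
    import requests
    from PIL import Image

    def create_image_from_prompt(self, prompt, save_path):
        # Ask the image endpoint for one 512x512 picture matching the prompt.
        response = openai.Image.create(
            prompt=prompt,
            n=1,
            size="512x512"
        )
        image_url = response['data'][0]['url']
        # The result is only referenced by URL, so download it and store it locally.
        response = requests.get(image_url)
        response.raise_for_status()
        image = Image.open(BytesIO(response.content))
        image.save(save_path)

The complete list of all requests necessary to create an article on the Cambrian explosion can be viewed here.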
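The two-step image generation described above (first ask GPT-3 for an image prompt, then pass that prompt to the image endpoint) can be sketched with the two API calls injected as plain callables. The function `illustrate` and its parameters `ask_gpt` and `make_image` are hypothetical stand-ins for the request and download functions shown in this article. They are passed in so the flow can be tried without network access.

```python
def illustrate(title, ask_gpt, make_image, save_path):
    """Create an illustration for an article title in two steps."""
    # Request text as quoted in the article, including its original wording.
    request = (
        "Create an DALL-E prompt in english for creating a photorealistic "
        f"illustration for an article with this title:{title}"
    )
    image_prompt = ask_gpt(request)      # step 1: the text model writes the prompt
    make_image(image_prompt, save_path)  # step 2: the image endpoint renders it
    return image_prompt


# Dry run with fake callables instead of real API requests.
calls = []
used_prompt = illustrate(
    "The Cambrian Explosion",
    ask_gpt=lambda request: "Create a photorealistic illustration of Cambrian animals.",
    make_image=lambda prompt, path: calls.append((prompt, path)),
    save_path="cambrian.png",
)
```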

According to OpenAI, AI-generated texts can be identified by means of an embedded fingerprint. Something like this could be achieved by subtle changes in word frequencies or by using certain rarer phrases. What exactly OpenAI does is unknown. However, there are already AI models that specialize in detecting AI-generated text. One example of such a detector is the openai-openai-detector, a GPT-2 detector based on RoBERTa.

The cat and mouse game between AI language systems and the AI systems built to detect them has begun. So let's take a look at the capabilities of the openai-openai-detector.

My tests show that translating a machine-generated text into a foreign language with DeepL and then translating it back does not protect against detection. Even after a round trip through Chinese or German, the English texts were still identified as products of GPT.

The "drunken pirate" writing style seems to confuse the GPT-2 detector the most.

(Image created by DALL-E; Prompt: "Create an image of a Pirate sitting at a table writing an essay. On the table is a bottle of rum and a knocked over glass.")


If you explicitly ask GPT-3 to write in a specific style, the text becomes harder to recognize as machine generated. For this you can use the "-s" option of the Ghostwriter script. For English texts written in the style of Carl Sagan, the detector was sure that the texts were authentic, even when the amount of text was large. However, if the sections are tested individually, the picture is reversed.

A writing style alone is therefore not an effective protection against detection. The only writing style that reliably protected against detection was the "Drunken Pirate" style. So for what it's worth, articles written in the style of a drunken pirate should not be easily recognizable as an AI product. At least for the time being.

Finally, it should also be mentioned that the GPT-2 detector is prone to false detections. This seems to be especially true for texts written in simplified English. For example, the article on the Magnetic Pendulum in the simplified English Wikipedia is classified as an AI product. But this is impossible, because this article version is three years older than GPT. After a short search in this Wikipedia branch I could also find other false detections, for example the article Northern Territory in the version of 2014 or the article about Chlorite.

I think it is unlikely that this is a coincidence. It is conceivable that texts in simplified English and AI-generated texts have a similar structure. It is also possible that language models are often trained on such simplified texts because they are easier to understand. In that case, they would naturally replicate the structure of these texts in their output. So for the openai-openai-detector specifically, I can't say for sure whether it is good at recognizing AI-generated texts or good at recognizing texts in simplified English. There seems to be significant overlap.